Once your Python programs grow beyond basic scripts run from a command line, using print() statements for logging becomes hard to scale. Using a logging module instead of print() lets you control where, how, and what you log, with much more granularity. As a result, you can reduce debugging time, improve code quality, and increase the visibility of your infrastructure.

To help you get up to speed with Python logging, we’re creating a multi-part guide to cover what you need to know to make your Python logging efficient, useful, and scalable. To get the most out of this guide, you should be comfortable with basic Python programming and understand general logging best practices.

In part one of our overview on Python logging, we’ll introduce you to the default logging module and log levels, and we’ll walk through basic examples of how you can get started with Python logging.

Learn More

Explore the complete Python Logging Guide series:

- Part 1: The Basics

- Part 2: Advanced Concepts

- Part 3: Centralized Python Logs

- Part 4: Logging with Django

Python’s default logging module

The first step in understanding Python logging is familiarizing yourself with the default logging module, which is included with Python’s standard library. The default logging module provides an easy-to-use framework for emitting log messages in a Python program. It’s simple enough that you can hit the ground running in a few minutes and extensible enough to cover a variety of use cases.

With the default Python logging module, you can:

- Create custom log messages with timestamps

- Emit logs to different destinations (such as the terminal, syslog, or systemd)

- Define the severity of log messages

- Format logs to meet different requirements

- Report that an error was caught and suppressed, without raising an exception

- Capture the source of log messages
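The last two capabilities can be seen together in a minimal sketch. A hypothetical parse_port() helper catches an exception, logs it with its traceback plus the function and line it came from, and then carries on with a default value instead of re-raising. An in-memory io.StringIO stream stands in for a real destination so the output is easy to inspect; the logger name is also illustrative.

```python
import io
import logging

# Send log output to an in-memory stream so it can be inspected afterwards
stream = io.StringIO()
handler = logging.StreamHandler(stream)
# Capture the source of each message: module, function name, and line number
handler.setFormatter(logging.Formatter(
    '%(levelname)s %(module)s.%(funcName)s:%(lineno)d %(message)s'))

logger = logging.getLogger('suppression_demo')  # illustrative name
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False  # keep the demo output away from the root logger


def parse_port(value):
    """Hypothetical helper: return a port number, falling back to 8080."""
    try:
        return int(value)
    except ValueError:
        # logger.exception() logs at ERROR level and appends the traceback;
        # the exception is suppressed here rather than re-raised
        logger.exception('Invalid port %r; using default 8080', value)
        return 8080


port = parse_port('not-a-number')
print(stream.getvalue())
```

The program keeps running with port set to 8080, while the log still records the ERROR line, the location it came from, and the full ValueError traceback.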

How does Python’s default logging module work?

At a high level, Python’s default logging module consists of these components:

- Loggers expose an interface that your code can use to log messages.

- Handlers send the logs created by loggers to their destination. Popular handlers include:

  - FileHandler: for sending log messages to a file

  - StreamHandler: for sending log messages to an output stream like stdout

  - SysLogHandler (in logging.handlers): for sending log messages to a syslog daemon

  - HTTPHandler (in logging.handlers): for sending log messages over HTTP

- Filters provide a mechanism to determine which logs are recorded.

- Formatters determine the output formatting of log messages.

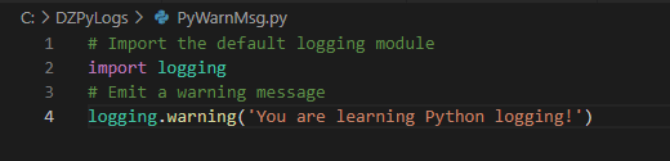
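To see how the four components fit together, here is a minimal sketch that wires a logger to a handler, gives the handler a formatter, and attaches a filter. The filter class, the "healthcheck" keyword it looks for, and the logger name are hypothetical; a filter's filter() method returns a true value to keep a record and a false value to drop it.

```python
import io
import logging


class DropHealthChecks(logging.Filter):
    """Hypothetical filter that discards records mentioning health checks."""

    def filter(self, record):
        # Returning False drops the record; returning True keeps it
        return 'healthcheck' not in record.getMessage()


stream = io.StringIO()

# Logger: the interface our code calls
logger = logging.getLogger('components_demo')
logger.setLevel(logging.DEBUG)
logger.propagate = False

# Handler: sends records to a destination (here, an in-memory stream)
handler = logging.StreamHandler(stream)

# Formatter: controls how each record is rendered
handler.setFormatter(logging.Formatter('%(name)s [%(levelname)s] %(message)s'))

# Filter: decides which records this handler emits
handler.addFilter(DropHealthChecks())

logger.addHandler(handler)

logger.info('GET /healthcheck 200')
logger.info('GET /orders 200')

print(stream.getvalue(), end='')  # components_demo [INFO] GET /orders 200
```

Only the second message reaches the stream, because the filter rejects the first one before the formatter ever sees it.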

To use the default logger, just add import logging to your Python program, and then create a log message.

Here’s a basic example that uses the default logger (also known as the root logger):
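Such a script can be as short as the following sketch, which assumes nothing beyond importing the module and emitting one WARNING-level message through the root logger:

```python
# Import the default logging module
import logging

# Emit a warning message through the root (default) logger
logging.warning('You are learning Python logging!')
```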

Running that code will print this message to the console:

```
WARNING:root:You are learning Python logging!
```

In that example, we can see the default message format is as follows:

```
<SEVERITY>:<NAME>:<MESSAGE>
```

Here, <NAME> is the name of the logger that emitted the message; the default logger is named root.
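That default layout corresponds to the format string in the module's BASIC_FORMAT constant, which basicConfig() falls back to when no format is given. A quick way to confirm this is to render a hand-built record with it; the record's fields below (including the example.py path) are illustrative values.

```python
import logging

# The format string basicConfig() uses when no format= argument is supplied
print(logging.BASIC_FORMAT)  # %(levelname)s:%(name)s:%(message)s

# Build a record by hand and render it with that format
formatter = logging.Formatter(logging.BASIC_FORMAT)
record = logging.LogRecord(
    name='root', level=logging.WARNING, pathname='example.py', lineno=1,
    msg='You are learning Python logging!', args=None, exc_info=None)
print(formatter.format(record))  # WARNING:root:You are learning Python logging!
```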

In many cases, we’ll want to modify how messages are formatted. We can call basicConfig() at the beginning of our code to customize formatting for the root logger.
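One detail explains the "at the beginning" advice: basicConfig() does nothing if the root logger already has handlers. The sketch below shows that behavior, with in-memory streams standing in for the console. The opening loop only simulates a freshly started program, and the force=True argument (available since Python 3.8) removes the existing handlers before reconfiguring.

```python
import io
import logging

# Start from an unconfigured root logger, as in a freshly started program
for existing in logging.root.handlers[:]:
    logging.root.removeHandler(existing)

first = io.StringIO()
second = io.StringIO()

# The first call configures the root logger
logging.basicConfig(stream=first, format='FIRST %(message)s')

# This call is silently ignored because the root logger now has a handler
logging.basicConfig(stream=second, format='SECOND %(message)s')
logging.warning('hello')

# force=True (Python 3.8+) removes the existing handlers and reconfigures
logging.basicConfig(stream=second, format='SECOND %(message)s', force=True)
logging.warning('hello again')

print(repr(first.getvalue()))   # 'FIRST hello\n'
print(repr(second.getvalue()))  # 'SECOND hello again\n'
```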

For example, suppose we want to add a timestamp to our message. We can add %(asctime)s to the format string we pass to basicConfig(). To retain the rest of our original formatting, we’ll also need to include %(levelname)s:%(name)s:%(message)s.

Our resulting code will look like this:

```python
# Import the default logging module
import logging

# Format the log message
logging.basicConfig(format='%(asctime)s %(levelname)s:%(name)s:%(message)s')

# Emit a warning message
logging.warning('You are learning Python logging!')
```

The output should look similar to the following:

```
2022-11-11 11:11:51,994 WARNING:root:You are learning Python logging!
```

Creating a custom logger

What if we don’t want to use the root logger?

In that case, we can create our own logger by calling logging.getLogger() with a name and then configuring that logger directly (remember, basicConfig() configures only the root logger). For example, the script below creates a HumioDemoLogger set to log INFO-level messages, with formatting similar to our previous example.

```python
# Import the default logging module
import logging

# Create our demo logger
logger = logging.getLogger('HumioDemoLogger')

# Set a log level for the logger
logger.setLevel(logging.INFO)

# Create a console handler
handler = logging.StreamHandler()

# Set INFO level for handler
handler.setLevel(logging.INFO)

# Create a message format similar to the earlier example, with " - " separators
formatter = logging.Formatter('%(asctime)s - %(name)s - %(levelname)s - %(message)s')

# Add our format to our handler
handler.setFormatter(formatter)

# Add our handler to our logger
logger.addHandler(handler)

# Emit an INFO-level message
logger.info('Python logging is cool!')
```

When you run the script, the output should look similar to the following:

```
2022-11-11 11:11:38,525 - HumioDemoLogger - INFO - Python logging is cool!
```
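The same pattern works with the other handlers listed earlier. As a sketch, the version below swaps the StreamHandler for a FileHandler so that HumioDemoLogger writes to a file instead of the console; the temporary directory and the demo.log file name are illustrative choices, not requirements.

```python
import logging
import os
import tempfile

# Illustrative location for the log file
log_path = os.path.join(tempfile.mkdtemp(), 'demo.log')

logger = logging.getLogger('HumioDemoLogger')
logger.setLevel(logging.INFO)

# FileHandler appends formatted records to the given file
file_handler = logging.FileHandler(log_path, encoding='utf-8')
file_handler.setLevel(logging.INFO)
file_handler.setFormatter(
    logging.Formatter('%(asctime)s - %(name)s - %(levelname)s - %(message)s'))
logger.addHandler(file_handler)

logger.info('Python logging is cool!')

# Close and detach the handler so the file is flushed and released
file_handler.close()
logger.removeHandler(file_handler)

with open(log_path, encoding='utf-8') as f:
    print(f.read(), end='')
```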

Python logging levels

If you’re familiar with the Syslog protocol, the idea of logging levels and log severity should be intuitive. In short, log messages generally include a severity that indicates the importance of the message.

There are six default severities in the default Python logging module. Each default severity is associated with a number, and a higher numeric value indicates a more severe logging level. The table below describes each of the default logging levels.

Default Python logging levels

| Level | Numeric Value | Description |
|---|---|---|
| Critical | 50 | Highest severity messages; may cause a program to crash or exit. |
| Error | 40 | High severity messages, generally related to an operation failing without causing the program to crash or exit. |
| Warning | 30 | Potentially negative events that may cause abnormal operation or otherwise require attention (such as the use of a deprecated API). |
| Info | 20 | Messages that log standard/expected events. |
| Debug | 10 | Messages useful for debugging how an application is running. |
| Notset | 0 | Default level of a newly created logger (the root logger itself defaults to Warning). Setting the root logger to NOTSET logs all messages. A non-root logger set to NOTSET defers to its ancestors: its effective level is that of the nearest ancestor whose level is not NOTSET. |
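A short sketch of how these numbers are used in practice: a logger emits only records at or above its effective level, and a logger left at NOTSET takes its effective level from its nearest configured ancestor. The logger names app and app.db are hypothetical; the dotted name makes app.db a child of app.

```python
import io
import logging

stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter('%(name)s %(levelname)s %(message)s'))

parent = logging.getLogger('app')
parent.setLevel(logging.WARNING)  # numeric value 30
parent.addHandler(handler)
parent.propagate = False

child = logging.getLogger('app.db')  # level is NOTSET (0) by default

print(logging.INFO, logging.getLevelName(logging.INFO))  # 20 INFO
print(child.level)                # 0
print(child.getEffectiveLevel())  # 30, inherited from 'app'

child.info('connection opened')   # 20 < 30: dropped
child.error('connection failed')  # 40 >= 30: emitted through the parent's handler

print(stream.getvalue(), end='')  # app.db ERROR connection failed
```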
